LLaVA

llava.hliu.cc hosts an open-source project that combines computer vision and natural language processing to build a multimodal AI assistant. Its goal is to develop large language and vision models that can understand and reason about both images and text, as a step toward more general intelligence.

This project links the CLIP visual encoder with the Vicuna large language model, creating LLaVA (Large Language and Vision Assistant).
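The listing does not say how the two models are connected. A minimal sketch of one common approach, assumed here rather than taken from the project's code, is a learned projection that maps image patch features from the vision encoder into the language model's embedding space, so that they can be placed in front of the text token embeddings. All names and sizes below are hypothetical toy values, not the real CLIP or Vicuna dimensions.

```python
import random

def linear_project(features, weight):
    """Multiply each feature row by a weight matrix of shape [in_dim][out_dim]."""
    return [
        [sum(x * w for x, w in zip(row, col)) for col in zip(*weight)]
        for row in features
    ]

rng = random.Random(0)

# Toy sizes (assumptions for illustration only).
VISION_DIM, LLM_DIM = 8, 16
NUM_PATCHES, NUM_TEXT_TOKENS = 4, 3

# Stand-ins for the vision encoder output and the text token embeddings.
image_features = [[rng.gauss(0, 1) for _ in range(VISION_DIM)] for _ in range(NUM_PATCHES)]
text_embeddings = [[rng.gauss(0, 1) for _ in range(LLM_DIM)] for _ in range(NUM_TEXT_TOKENS)]

# The projection is what would be trained; here it is random.
projection = [[rng.gauss(0, 0.02) for _ in range(LLM_DIM)] for _ in range(VISION_DIM)]

# Project image patches into the language model's space, then prepend them to the text.
visual_tokens = linear_project(image_features, projection)
llm_input = visual_tokens + text_embeddings

print(len(llm_input), len(llm_input[0]))  # prints: 7 16
```

In this sketch the language model would see seven "tokens": four derived from the image and three from the text prompt.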

LLaVA has exhibited strong performance on benchmarks, approaching GPT-4 capabilities for multimodal tasks:

- It achieves an 85.1% relative score compared with GPT-4 on a visual instruction-following evaluation set.

- Combined with GPT-4, LLaVA sets a new state of the art on the ScienceQA dataset, with 92.53% accuracy.
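The listing does not define "relative score". One plausible reading, assumed here, is that a judge rates the candidate's answer and the reference model's answer to each question, and the relative score is the ratio of the two totals. The ratings below are invented toy numbers, not results from any evaluation.

```python
def relative_score(candidate_ratings, reference_ratings):
    """Return the candidate's total rating as a percentage of the reference's total."""
    if not candidate_ratings or len(candidate_ratings) != len(reference_ratings):
        raise ValueError("need one rating per question for both models")
    return 100.0 * sum(candidate_ratings) / sum(reference_ratings)

# Hypothetical per-question ratings on a 1-10 scale.
candidate = [8, 7, 9, 6]   # the model under test
reference = [9, 8, 9, 8]   # the reference model

print(f"{relative_score(candidate, reference):.1f}%")  # prints: 88.2%
```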

Pricing Model:

FREE

How to Use

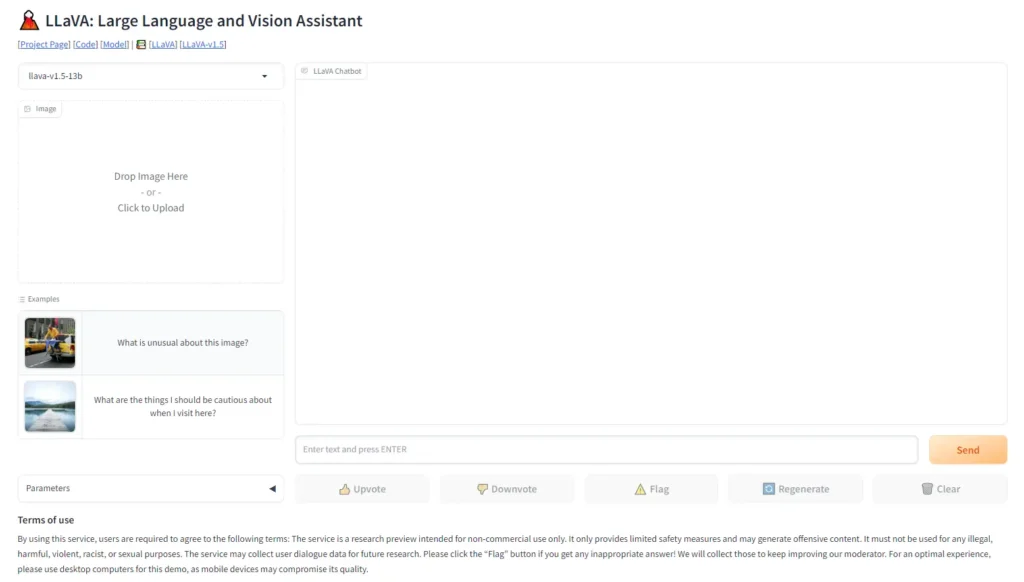

llava.hliu.cc offers a web demo for free non-commercial use, along with code, models, and documentation on GitHub:

- Web Demo: Users can upload an image and pose questions to LLaVA about it, with the option to select different model sizes.

- Code: Implementation of model architecture, training scripts, and APIs.

- Models: Pretrained checkpoints for 7B and 13B parameter models.

- Docs: Detailed instructions for using APIs, model training, and contribution guidelines.

Researchers and developers can build on these resources in their own projects and applications, within the non-commercial terms.
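For anyone planning to run the checkpoints locally, a rough back-of-the-envelope estimate of the memory needed just to hold the weights can help with hardware planning. The sketch below assumes nominal parameter counts of 7 and 13 billion and ignores the vision encoder, activations, and generation cache, so real requirements will be higher. The `int8` row is a hypothetical comparison; the listing does not say whether quantized checkpoints are offered.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

def weight_memory_gb(num_params, dtype):
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

for name, params in [("7B", 7e9), ("13B", 13e9)]:
    for dtype in ("fp16", "int8"):
        print(f"{name} {dtype}: ~{weight_memory_gb(params, dtype):.0f} GB")
```

At 16-bit precision this gives roughly 14 GB for the 7B model and 26 GB for the 13B model, before any working memory is counted.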

Pros and Cons

Pros:

- Cutting-edge multimodal model fusing vision and language.

- Strong benchmark performance, with an 85.1% relative score against GPT-4 on visual instruction following.

- Free access to web demo, code, and models for non-commercial use.

- Customizable – users can train their own models using provided scripts.

- Promising direction for more general AI capabilities.

Cons:

- Still in an early research phase with limited safety measures.

- Can occasionally generate offensive or nonsensical content.

- Accuracy is still below that of proprietary models like GPT-4.

- Training and running your own models requires expertise.

- Primarily intended for research purposes.

Pricing

llava.hliu.cc is free for non-commercial use, including the web demo, source code, pretrained models, and documentation. Commercial use or custom solutions require consulting the research team; no pricing plan is published.

Conclusion

llava.hliu.cc shows promising capabilities as an open-source multimodal AI assistant that merges vision and language models. Although still at an early research stage, it has the potential to approach the abilities of proprietary models like GPT-4.

For those interested in experimenting with cutting-edge AI, llava.hliu.cc offers open access to its tools and models. Safety and accuracy are still evolving, however. Over time, models such as LLaVA could pave the way for broader intelligence across modalities.
